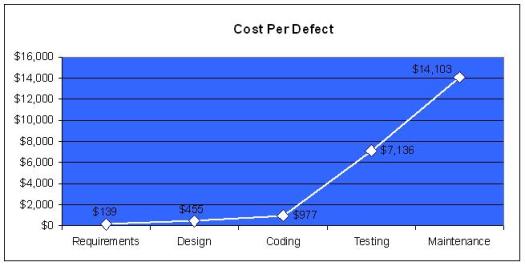

I recall from my early consulting days a graphic that was often hauled out at management meetings and retrospectives. It looked something like this:

I’ve often wondered about the source of this wisdom, and after some research I discovered that it comes from Capers Jones, Software Assessments, Benchmarks, and Best Practices, Addison-Wesley, 2000. Yep, Capers calculated this out to the dollar for us, although in my memory similar graphics have been in use since at least 1990.

In pondering the graph, what should one conclude? Search Google, and the top results use it to justify investing extra time, effort, and capital in fixing errors during the early tasks of requirements, design, and coding. Spend enough on these tasks, the reasoning goes, and you can save $2,276,700 on a typical project.

What if, though, we assumed that the horizontal axis represented not phases but simply time? The conclusion would be quite different. If we could complete requirements, design, coding, testing and acceptance in the time typically taken for requirements alone, we would never pay 10 times the starting cost to fix a defect. Instead of saving money, added time in any single task of development almost guarantees higher costs of correcting defects later in the timeline.

I’d like to do the kind of scholarly research Capers did on this to get empirical data. In the absence of that, using anecdotal evidence from applying agile to software development for the last eight years, I feel much more comfortable with this model. I’ve been on very long projects where early assumptions proved wrong or changed because different interest groups looked at them later. I’ve experienced long requirements cycles that delayed the introduction of software, only to find out that key features were missing. With agile, development moves through all phases more quickly, and these problems are largely alleviated.

Thus, my second “law of agile physics”: The cost of correcting a defect rises exponentially with the time taken to identify the defect. Or more generally, bad news does not age well. (The first law is coming next.)
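
To make the shape of that curve concrete, here is a minimal sketch in Python. It assumes the cost to fix doubles at a fixed interval; the function name `defect_cost`, the base cost of 100, and the four-week doubling time are all hypothetical illustration values, not figures from Jones or anyone else.

```python
def defect_cost(base_cost: float, elapsed: float, doubling_time: float) -> float:
    """Estimate the cost to fix a defect found `elapsed` time units after it
    was introduced, ASSUMING the cost doubles every `doubling_time` units.

    The doubling model and every number fed into it are illustrative
    assumptions, not measured data.
    """
    if doubling_time <= 0:
        raise ValueError("doubling_time must be positive")
    return base_cost * 2 ** (elapsed / doubling_time)


if __name__ == "__main__":
    # Hypothetical project: fixing a defect on day one costs 100,
    # and the cost doubles every 4 weeks the defect goes unnoticed.
    for weeks in (0, 4, 8, 12):
        print(f"found after {weeks:2d} weeks: {defect_cost(100, weeks, 4):.0f}")
```

Under these made-up numbers, a defect found after 12 weeks costs eight times what it would have cost on day one, regardless of which phase the project happens to be in when it is found.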

Capers Jones “Software Assessments, Benchmarks, and Best Practices”? Are you sure that you are referencing the correct Capers book? I cannot find these details in this book.

Bummer that my link to the reference article is no longer available. Here’s a quick reference to the work Jones did while at IBM on Relative Costs to Fix Software Defects: http://software.isixsigma.com/library/content/c060719b.asp

I have also seen this table linked to Jones’ earlier work, Programming Productivity (New York: McGraw-Hill, 1986).

I’ll keep looking to see whether I can find the original article with the reference.

After more detective work, Ron has found that this data was first published by Barry Boehm in his 1981 book “Software Engineering Economics.” In fact, it may go back even further, to a paper Boehm published in “IEEE Transactions on Computers” in 1976.

See http://books.google.com/books?id=uE4FGFOHs2EC&pg=PA220&lpg=PA220&dq=Software+Engineering+Economics+Boehm+cost+Defects&source=bl&ots=OKXItE1t1r&sig=_H7F7dG57Hy7iBvidOKJ726wmLc&hl=en&ei=9IYRSpqhF6Cc8QThpKGiBg&sa=X&oi=book_result&ct=result&resnum=2#PPA220,M1

The equating of cost to time-to-find-defect, as stated above, works before the software is shipped or deployed.

However, once software is deployed, defect costs may explode due to the cost of support, the cost of the upgrade cycle, and reputation and opportunity costs if your software is truly buggy. If your software is embedded in another product (e.g. a gas pump), then costs of remediation can skyrocket. And if your software is mission critical, then the defects can be astonishingly expensive (e.g. a crashed and destroyed Mars lander).

During the dev cycle there is sometimes a geometric increase in cost due to building on software that later has to be rewritten. Depending on the nature of the defect, the cost to fix can be trivial, massive, or anywhere in between.

To your recommendation, yes, test early and test often is the best way to minimize overall development and maintenance cost. This includes unit and regression testing, as well as ad-hoc and performance/stress testing.

The biggest quality- and cost-related mistakes we see dev teams make are:

– no or weak test plan or consideration of how to test during the design phase

– no or weak unit testing, leading to a house of cards when software is integrated

– no or weak or late in cycle regression testing, leading to regressions (defects where the software worked previously) that are not found immediately after builds, leading in turn to the issues in the original article above

– no or weak or late in cycle automation of regression testing, leading to slow and expensive regression testing

– stress testing and performance testing as an afterthought, if done at all, leading to last-minute and often substantial schedule delays (if done late) or field problems on deployment (if not done at all)

– inadequate or non-existent defect tracking, measurement, and communication tools and processes

– failure to set quality targets and metrics at the start of the project, leading to last-minute, often poor decisions under pressure to release without the quality metrics or targets to support the decisions

It gets down to thinking about and planning for quality from the start and all the way through, putting the right processes and tools in place to support quality engineering, and instilling the passion for quality in the entire project team.

Gordon

Nope, don’t agree with your conclusion, if the horizontal axis was time vs. phase.

You’re completely ignoring the hierarchical nature of engineering artifacts.

Is a defect in code a simple code mistake, or correct code based upon a defect in the architecture/design, or correct code from correct design but based upon incorrect requirements?

If I discover a defect in coding (which clearly can’t occur until after some requirements are at least roughly written), what artifacts do I change/review?

Regardless of the title you put on the metric, the more “complete” a system is, the more expensive it is to fix something that’s broken AND to ensure that nothing else is also broken.

Dennis,

Thanks for the comment.

Let me challenge your thinking for a second to ensure you are approaching this from an agile, iteration-oriented mindset. To most, a defect is a defect. It does not matter whether it is “a simple code mistake, … or a defect in the architecture/design, … or based upon incorrect requirements.” The end customer does not care, so why make a distinction?

If your project is such that you can get requirements, design, build, test and refine rapidly, you don’t have to think in terms of artifacts. The final product is the only artifact that matters. It is also the one on which testing can be conducted and on which the customer or end user can most directly give feedback. If we start with the key tenets of agile development, we can build, test, and get feedback on the actual product quickly. This is much better than doing it on an artifact (like a design specification) and will ensure we get the PRODUCT right more quickly.

I’m not saying that changing words in a specification is as difficult as changing final code. I’m saying that the more time I spend getting ‘artifacts’ correct without proper feedback and testing, the more likely I am to create errors. The more development the product has been through, the more features, interdependencies and commitments are likely to have been made. These are the things that make defects difficult to fix, not the artifact in which they are fixed. The more time that has been invested in the product, whether in design or iterative build, the more likely I am to incur large repair costs when that fix is made. The better my feedback, the less likely I am to have errors late in development. This is especially true if testing is difficult or requirements are hard for users to envision and articulate.

Jon, I don’t think the assertion that “a defect is a defect” really holds up under more analysis. It does matter where the defect occurs because re-thinking a concept can be done in a day. Re-implementing that concept could take staff-months. And refactoring in an agile environment is not free – not for a system of any complexity. If the architectural basis of the system is stable and well-thought out and implemented, then the impact of changes is minimized (but still not free). If it is not, major risk is introduced into the software system. And let’s not forget the industry standard value for error injection – about 25% if I recall. That means that someone fixing a bug has a 1 in 4 chance of injecting another bug during the process.
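
As a rough worked example of what such an injection rate would imply (a sketch under simplifying assumptions, not an industry formula): suppose every fix independently carries the same chance p of introducing one new defect, and each new defect must be fixed in turn. The expected number of fixes needed per original defect is then the geometric series 1 + p + p^2 + ... = 1 / (1 - p). The helper name `expected_fixes` is hypothetical.

```python
def expected_fixes(injection_rate: float) -> float:
    """Expected number of fixes needed to fully close out one defect,
    ASSUMING each fix independently injects one new defect with
    probability `injection_rate` and every injected defect is fixed too.

    This is the sum of the geometric series 1 + p + p**2 + ...
    """
    if not 0 <= injection_rate < 1:
        raise ValueError("injection_rate must be in [0, 1)")
    return 1.0 / (1.0 - injection_rate)


if __name__ == "__main__":
    # With the roughly 25% rate recalled above, each original defect
    # costs about one and a third fixes on average.
    print(round(expected_fixes(0.25), 3))
```

At a 25% rate this works out to about 1.33 fixes per original defect, so a third more repair work than the defect count alone suggests.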

George, while I agree with your points, I’m not sure I suggested all defects are created equal. And I’m not suggesting that there is no need for getting a concept right. The debate should focus on whether the best way to find out what is needed is through the paper deliverables of waterfall or through a minimum viable product that generates real-world customer feedback. With a simple enough product, an agile team could conceivably throw away the initial feedback product and start over with the right concept in less time than waterfall would take in attempting to “get it right” the first time. I’ve seen initial products launched on a WordPress blog with email ordering to generate data around critical decision points.

Test-driven development, continuous integration, pair programming and modular design with timely refactoring are among the development techniques that help make changing code more agile and less error prone, but I was not focused on those here.

Hope that helps. -Jon

Thanks for this write-up! What you have shown me is that the way in which you perceive a problem, state the problem or state observations can lead to conclusions based on what you desire (perceive) a solution to be, and can limit your options.

The statement “The cost of correcting a defect rises exponentially with the time taken to identify the defect.” is much crisper and shows that the two different methodologies (call them disciplined waterfall vs. agile) are approaching the same problem along different dimensions. One does so by investing heavily up front to get things right and prevent downstream defects in later phases. The other does so by getting early feedback on a delivered system (either internally or externally from a customer) and therefore discovering defects early, when they can be quickly resolved.

For me, the tension between these two approaches on this issue is now greatly reduced. In fact, it shows that rather than a binary choice (disciplined or agile), there could potentially be a whole range of solutions to explore on this two-dimensional plane. Finding the proper balance (and adjusting the balance over the project lifetime) according to the specific project you are working on would seem wise.

John,

Thanks for the note and your insightful analysis. I agree that a balanced approach is a viable alternative. I think it depends mainly on how unique the domain you are working in is. If you know what customers want or are recreating what’s been done before, disciplined waterfall is not a bad option. If you are doing something new or are unsure of what customers want, disciplined agile will likely give better results.

-Jon

The good thing is that there is no dearth of professional software services providers. All these things are important in getting software developed in a well-customized way. For further enrichment of projects and applications, software development companies make use of online conferencing services, learning tools, e-commerce, catalogs, databases, etc.
